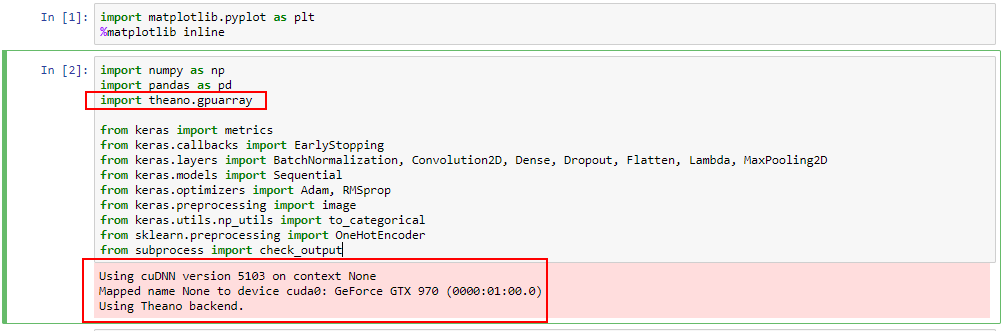

One of the biggest challenges in practicing deep learning is having a research environment in which to build, train, and test models. Building a deep-learning-capable personal computer or using a cloud-based development environment can be costly, time-consuming to set up, or both. This post is designed to help FastAI (https://course.fast.ai/about.html) learners use Google’s Colaboratory research tool.

Google Colaboratory (Colab) is an online research tool for machine learning. It is FREE and offers GPU/TPU hardware acceleration for training deep learning models.

Hardware acceleration can be changed in the Edit menu under Notebook Settings.
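
After changing the setting, it can be useful to confirm from a notebook cell that a GPU is actually attached to the runtime. The snippet below is a minimal sketch, not an official Colab check: it assumes an NVIDIA GPU runtime where the `nvidia-smi` command-line tool is on the path, and the helper name `describe_accelerator` is made up for illustration. It does not detect TPUs.

```python
import shutil
import subprocess


def describe_accelerator():
    """Return a short message saying whether an NVIDIA GPU is visible."""
    # Assumption: GPU runtimes expose the nvidia-smi tool on the PATH.
    if shutil.which("nvidia-smi") is None:
        return "No GPU detected (nvidia-smi not found)"

    # Ask nvidia-smi for just the device name, one GPU per line.
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0 or not result.stdout.strip():
        return "No GPU detected (nvidia-smi reported no devices)"

    return "GPU detected: " + result.stdout.strip().splitlines()[0]


print(describe_accelerator())
```

If the message says no GPU was detected, re-check the hardware accelerator setting and reconnect the runtime before training.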

Google Colab FAQ (https://research.google.com/colaboratory/faq.html)

The FastAI courses (https://course.fast.ai/index.html), taught by Jeremy Howard and Rachel Thomas, are great learning resources for anyone who is interested in deep learning.

Below is a link to a Google Colab Jupyter notebook. This notebook sets up the Google Colab runtime with the tools and libraries needed to build the deep learning models covered in the FastAI lessons.

https://colab.research.google.com/drive/1ppP7qds7VJfzfISMFynbR-t40OuEPbUS
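
Once the setup notebook has run, a quick sanity check can confirm that the libraries are importable in the runtime. This is only a sketch: the notebook's exact contents are not reproduced here, so the package list (`torch`, `fastai`) is an assumption, and `missing_packages` is a hypothetical helper name.

```python
import importlib.util


def missing_packages(names):
    """Return the package names from `names` that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Assumed package list; the linked notebook may install more or different ones.
required = ["torch", "fastai"]

missing = missing_packages(required)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages are importable.")
```

If anything is reported missing, re-run the setup cells in the notebook before starting a lesson.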