When image matrices and their associated labels are stored in two separate arrays, splitting the data randomly into training and test sets can be awkward, because every image has to stay paired with its label after shuffling. Scikit-learn makes this split relatively easy. The example below uses data from the Statoil/C-CORE Iceberg Classifier Challenge Kaggle competition.

Initial setup:

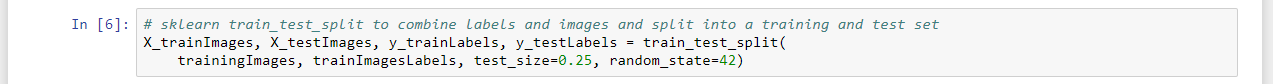

Splitting data into training and test sets:
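In text form, the split can be sketched roughly as follows. This is a minimal illustration, not the exact code used for the competition: the arrays `images` and `labels` are synthetic stand-ins, and the 75x75 image size, the 25% test fraction, and the `random_state` value are assumptions chosen for the example. The call itself, `train_test_split` from `sklearn.model_selection`, shuffles both arrays with the same permutation, so each image keeps its label.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic stand-ins (assumption): 100 "images" of 75x75 pixels
# and one binary label per image, held in two separate arrays.
rng = np.random.default_rng(0)
images = rng.normal(size=(100, 75, 75))
labels = rng.integers(0, 2, size=100)

# Passing both arrays to one call applies the same random split to each,
# so row i of X_train still corresponds to entry i of y_train.
X_train, X_test, y_train, y_test = train_test_split(
    images,
    labels,
    test_size=0.25,   # hold out 25% of the samples for testing (assumed value)
    random_state=42,  # fixed seed so the split is reproducible
)

print(X_train.shape, X_test.shape)
print(y_train.shape, y_test.shape)
```

If the classes are imbalanced, passing `stratify=labels` to the same call should keep the class proportions similar in both sets.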