After installing the Ubuntu operating system, the following commands were executed in the terminal:

If the operating system is an earlier release than 16.04 LTS, upgrade it first:

sudo do-release-upgrade
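Whether the upgrade is needed can be checked by comparing the installed release number against 16.04. The sketch below assumes GNU `sort -V` and the `lsb_release` tool, both of which ship with a stock Ubuntu install; the function name `is_older_than_1604` is made up for illustration.

```shell
# Succeeds (exit status 0) when the given release number is older than 16.04.
is_older_than_1604() {
    release="$1"
    [ "$release" != "16.04" ] || return 1
    # sort -V orders version strings; the oldest one comes out first.
    oldest="$(printf '%s\n' "$release" "16.04" | sort -V | head -n 1)"
    [ "$oldest" = "$release" ]
}

# Only query the system when lsb_release is available.
if command -v lsb_release >/dev/null 2>&1; then
    current="$(lsb_release -rs)"
    if is_older_than_1604 "$current"; then
        echo "Release $current is older than 16.04: run sudo do-release-upgrade"
    else
        echo "Release $current needs no release upgrade"
    fi
fi
```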

--optional start

Install an SSH server to log in to the deep learning PC remotely from another computer on the same network.

sudo apt-get install openssh-server -y

Verify that the SSH service is running.

sudo service ssh status

Identify the machine's network IP address to enter in the SSH client. A popular SSH client is PuTTY.

ifconfig
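As an alternative to reading the `ifconfig` output by eye, `hostname -I` prints the configured addresses on a single line. The sketch below picks the first IPv4 address from such a line; the helper name `first_ipv4` is hypothetical.

```shell
# Print the first IPv4-looking address from a space-separated address list.
first_ipv4() {
    for addr in $1; do
        case "$addr" in
            *:*) ;;                              # skip IPv6 addresses
            *.*.*.*) echo "$addr"; return 0 ;;   # dotted quad: print and stop
        esac
    done
    return 1
}

# hostname -I lists the configured addresses (the loopback one is omitted).
if addresses="$(hostname -I 2>/dev/null)"; then
    first_ipv4 "$addresses" || echo "No IPv4 address found"
fi
```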

--optional end

Install Tmux, which is useful for operating multiple terminal windows within the same SSH session. Search online for Tmux for more information.

sudo apt-get install tmux
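A common pattern over SSH is to keep one named Tmux session and return to it after every reconnect. The sketch below is one way to do that; the function name `tmux_attach_or_create` and the default session name `main` are illustrative choices.

```shell
# Attach to a named tmux session, creating it first if it does not exist.
tmux_attach_or_create() {
    session="${1:-main}"
    if tmux has-session -t "$session" 2>/dev/null; then
        tmux attach-session -t "$session"
    else
        tmux new-session -s "$session"
    fi
}
```

Running `tmux_attach_or_create dl` starts a session called `dl`. Detach with Ctrl-b followed by d; running the same command after reconnecting resumes the session where it was left.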

Update and reboot the Ubuntu operating system

sudo apt-get update

sudo apt-get upgrade -y

sudo apt-get dist-upgrade -y

sudo reboot

Installing Anaconda for Ubuntu

cd /tmp

curl -O https://repo.continuum.io/archive/Anaconda3-4.4.0-Linux-x86_64.sh

bash Anaconda3-4.4.0-Linux-x86_64.sh

[press Enter and answer yes to all prompts]

sudo reboot
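Because the Anaconda installer is a downloaded script that gets executed, its SHA-256 digest can be checked before it is run. The sketch below assumes the expected digest has been copied from Anaconda's published hash list; the helper name `verify_sha256` is hypothetical and the digest in the example comment is a placeholder, not a real value.

```shell
# verify_sha256 FILE EXPECTED_HEX
# Succeeds only when FILE's SHA-256 digest equals EXPECTED_HEX.
verify_sha256() {
    actual="$(sha256sum "$1" | cut -d ' ' -f 1)"
    [ "$actual" = "$2" ]
}

# Example (placeholder digest; substitute the published value):
# verify_sha256 /tmp/Anaconda3-4.4.0-Linux-x86_64.sh "<published sha256>" \
#     && bash /tmp/Anaconda3-4.4.0-Linux-x86_64.sh
```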

After Anaconda is installed, configure Jupyter notebooks.

jupyter notebook --generate-config

[note config location]

jupyter notebook password

[enter password]

nano ~/.jupyter/jupyter_notebook_config.py

Change #c.NotebookApp.ip = 'localhost' to c.NotebookApp.ip = '[your ip]'

Change #c.NotebookApp.port = 8888 to c.NotebookApp.port = [your port]
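The two changes can also be scripted rather than made by hand in the editor. This is a minimal sketch, assuming GNU `sed` and a freshly generated config file in which both options are still commented out; the helper name `set_notebook_option` and the address and port in the example comments are placeholders.

```shell
# set_notebook_option FILE KEY VALUE
# Uncomments (if needed) the c.NotebookApp.KEY line and sets it to VALUE.
set_notebook_option() {
    sed -i -E "s|^#? *c\.NotebookApp\.$2 *=.*|c.NotebookApp.$2 = $3|" "$1"
}

# Example with placeholder values (quote strings the way Python expects):
# set_notebook_option ~/.jupyter/jupyter_notebook_config.py ip "'192.168.1.20'"
# set_notebook_option ~/.jupyter/jupyter_notebook_config.py port 8888
```

The pattern only matches a line that starts with the option name, so related options such as `port_retries` are left alone.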

Create a Jupyter notebook directory in your Documents directory

cd ~/Documents/

mkdir nbs

cd nbs

jupyter notebook

[verify and connect]

Install the NVIDIA repository and CUDA

cd /tmp

wget "http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64/cuda-repo-ubuntu1604_8.0.44-1_amd64.deb" -O "cuda-repo-ubuntu1604_8.0.44-1_amd64.deb"

sudo dpkg -i cuda-repo-ubuntu1604_8.0.44-1_amd64.deb

sudo apt-get update

sudo apt-get -y install cuda

sudo reboot

sudo modprobe nvidia

Verify that the GPU is recognized

nvidia-smi

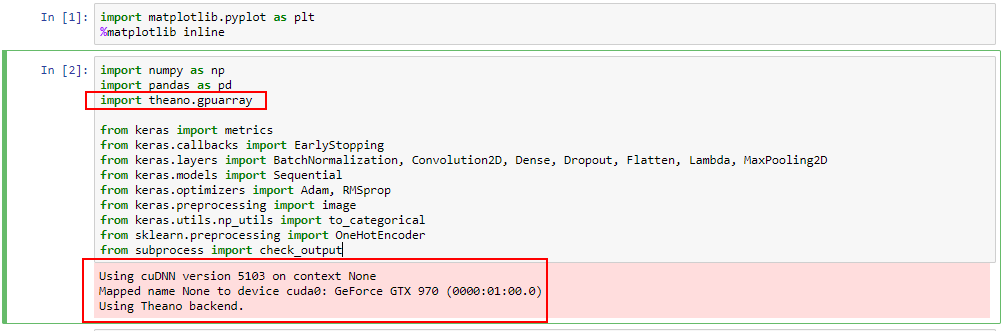
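For a check that is easier to script than the full table, `nvidia-smi` can emit selected fields as CSV. The sketch below turns that output into one summary line per GPU; the helper name `summarize_gpus` is hypothetical, and the query is skipped when `nvidia-smi` is not installed.

```shell
# Read "name, driver, memory" CSV lines on stdin and print one summary per GPU.
summarize_gpus() {
    index=0
    while IFS=',' read -r name driver memory; do
        # Trim the space that follows each comma in nvidia-smi's CSV output.
        echo "GPU $index: $name (driver ${driver# }, memory ${memory# })"
        index=$((index + 1))
    done
}

# Only query the driver when nvidia-smi is installed.
if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=name,driver_version,memory.total \
        --format=csv,noheader | summarize_gpus
fi
```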

Install bcolz and pip.

conda install -y bcolz

sudo apt-get install python3-pip -y

Upgrade Anaconda modules

conda upgrade -y --all

Install Keras

pip install keras==1.2.2

Create Keras directory

mkdir ~/.keras

Create the Keras JSON configuration file. (Copy everything from echo through keras.json, paste it into the terminal, and press Enter.)

echo '{

"image_dim_ordering": "th",

"epsilon": 1e-07,

"floatx": "float32",

"backend": "theano"

}' > ~/.keras/keras.json
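Pasting a multi-line `echo` is easy to get wrong, so a here-document is a possible alternative that writes the same file in one step. The sketch below reproduces the settings above; the helper name `write_keras_config` and its directory argument are illustrative.

```shell
# write_keras_config DIR
# Creates DIR if needed and writes keras.json with the Theano settings.
write_keras_config() {
    mkdir -p "$1"
    cat > "$1/keras.json" <<'EOF'
{
    "image_dim_ordering": "th",
    "epsilon": 1e-07,
    "floatx": "float32",
    "backend": "theano"
}
EOF
}

# Example: write_keras_config ~/.keras
```

Afterwards, `python -m json.tool ~/.keras/keras.json` prints the file back if it is valid JSON and reports an error otherwise.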

Install Theano

pip3 install theano

Create the Theano configuration file. (Copy everything from echo through .theanorc, paste it into the terminal, and press Enter.)

echo "[global]

device = gpu

floatX = float32

[cuda]

root = /usr/local/cuda" > ~/.theanorc
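A typo in `.theanorc` can go unnoticed, so it can help to read the `device` value back from the file. This is a rough sketch rather than a full INI parser; the helper name `theanorc_device` is hypothetical.

```shell
# theanorc_device FILE
# Prints the value of the first "device" key found in a Theano config file.
theanorc_device() {
    sed -n -E 's/^ *device *= *([^ ]+) *$/\1/p' "$1" | head -n 1
}

# Example: [ "$(theanorc_device ~/.theanorc)" = "gpu" ] && echo "GPU requested"
```

This only confirms what the file requests. With Theano installed, `python -c "import theano; print(theano.config.device)"` reports the device Theano actually selected.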

Install pygpu for Theano

conda install theano pygpu

Download the fast.ai cuDNN archive, extract it, and copy its contents to the CUDA directories

wget "http://files.fast.ai/files/cudnn.tgz" -O "cudnn.tgz"

tar -zxf cudnn.tgz

cd cuda

sudo cp lib64/* /usr/local/cuda/lib64/

sudo cp include/* /usr/local/cuda/include/
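After the copy, a quick check can confirm that the cuDNN files landed where CUDA expects them. The sketch below assumes the archive follows the usual cuDNN layout, with a `cudnn.h` header and `libcudnn*` libraries; the helper name `check_cudnn` is hypothetical.

```shell
# check_cudnn CUDA_ROOT
# Succeeds when a cuDNN header and at least one cuDNN library are present.
check_cudnn() {
    root="${1:-/usr/local/cuda}"
    [ -f "$root/include/cudnn.h" ] || { echo "missing $root/include/cudnn.h"; return 1; }
    # ls fails when the glob matches nothing.
    ls "$root"/lib64/libcudnn* >/dev/null 2>&1 || { echo "no libcudnn in $root/lib64"; return 1; }
    echo "cuDNN files found under $root"
}

# Example: check_cudnn /usr/local/cuda
```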

Install Glances, a great application for monitoring computer usage, including GPU usage.

curl -L https://bit.ly/glances | /bin/bash